Details


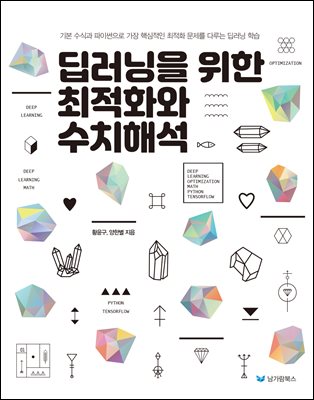

Optimization and Numerical Analysis for Deep Learning (딥러닝을 위한 최적화와 수치해석)

- Authors: 황윤구, 양한별 (co-authors)
- Publisher: 남가람북스 (Namgaram Books)
- Publication date: 2025-12-12
- Registration date: 2026-01-23
- File format: PDF
- File size: 25MB
- Supplier: YES24
- Supported devices: PC, phone, tablet, web viewer


Book Description

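This book covers optimization theory and how to train a variety of deep learning models with TensorFlow. Deep learning draws on many mathematical theories, but this book studies deep learning through the most central of them: the optimization problem. Deep learning books and courses published to date either skip optimization theory or treat it only briefly, which falls far short of what is needed to understand it. This book introduces optimization theory first and then solves optimization problems with TensorFlow. The same approach carries over unchanged when deep learning models are trained with TensorFlow. Besides TensorFlow, there are many other deep learning packages, such as PyTorch and Keras, and all of them share the same ultimate goal: solving an optimization problem.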
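To make the premise "training a model means solving an optimization problem" concrete, here is a minimal sketch that fits a line y = w * x + b by minimizing the mean squared error with plain gradient descent, the topic listed as Section 6.4 in the contents. It is not code from the book: it is written in plain Python rather than TensorFlow so it runs without extra packages, and the function names (`mse_loss`, `gradient_descent`), the data, the learning rate, and the step count are all illustrative assumptions.

```python
# Minimal sketch (not from the book): fit y = w * x + b by gradient descent
# on the mean squared error. Plain Python, no TensorFlow; the data, learning
# rate, and step count are illustrative assumptions.


def mse_loss(w, b, xs, ys):
    """Mean squared error of the line w * x + b on the data (xs, ys)."""
    n = len(xs)
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n


def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Minimize the MSE over (w, b) with full-batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Residuals of the current line on every data point.
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the MSE with respect to w and b.
        grad_w = 2.0 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = 2.0 * sum(residuals) / n
        # Take one step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    # Hypothetical noise-free data generated from y = 2x + 1.
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [2.0 * x + 1.0 for x in xs]
    w, b = gradient_descent(xs, ys)
    print(f"w = {w:.3f}, b = {b:.3f}, loss = {mse_loss(w, b, xs, ys):.6f}")
```

On this noise-free data the loop should recover values very close to w = 2 and b = 1. A framework such as TensorFlow replaces the hand-written partial derivatives with automatic differentiation, but the underlying loop (compute the gradient, step against it) is the same.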

About the Authors

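Majored in computer science at KAIST (Korea Advanced Institute of Science and Technology) and earned a master's degree in electronic engineering from the Department of Computational Science and Engineering at Yonsei University. Previously worked at Samsung Medison and NVIDIA, and currently works at Siemens Healthineers.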

Table of Contents

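- Preface
- Introduction
- PART 1 Programming Preparation
  - Chapter 01 Setting Up the Development Environment
    - 1.1 Installing Anaconda
      - 1.1.1 Installing on Windows
      - 1.1.2 Installing on macOS
      - 1.1.3 How to Open the Terminal
      - 1.1.4 Creating and Deleting Environments, and Installing Packages
      - 1.1.5 Activating and Deactivating Environments
      - 1.1.6 Installing Packages in an Environment
      - 1.1.7 Exporting and Importing Environments
    - 1.2 Installing TensorFlow and Related Packages
      - 1.2.1 Importing via yml
      - 1.2.2 Setting Up Manually without yml
  - Chapter 02 Jupyter Notebook and Python Tutorial
    - 2.1 Jupyter Notebook
      - 2.1.1 Running Python Code
      - 2.1.2 Markdown
      - 2.1.3 Handy Features
    - 2.2 Basic Python Syntax
      - 2.2.1 Declaring Variables and Functions, and Anonymous Functions
      - 2.2.2 Main Variable Types
      - 2.2.3 for Loops
      - 2.2.4 if Statements
      - 2.2.5 Generators
    - 2.3 Frequently Used Python Syntax Patterns
      - 2.3.1 for Loop Styles for Different Data Types
      - 2.3.2 for Loops with zip
      - 2.3.3 One-Line for Loops
      - 2.3.4 Reading and Writing Files
    - 2.4 NumPy Arrays
      - 2.4.1 n-Dimensional Arrays
      - 2.4.2 Array Shape
      - 2.4.3 Transpose
      - 2.4.4 Reshape
      - 2.4.5 Array Indexing
    - 2.5 Visualization Package (matplotlib) Tutorial
      - 2.5.1 Drawing Scatter Plots
      - 2.5.2 Drawing Pair Plots
      - 2.5.3 Plotting Single-Variable Functions
      - 2.5.4 Viewing Multiple Plots at Once
      - 2.5.5 Styling Plots
      - 2.5.6 Plotting Multivariable Functions
  - Chapter 03 TensorFlow Tutorial
    - 3.1 Installing TensorFlow
    - 3.2 Understanding the Structure of TensorFlow
      - 3.2.1 Graph
      - 3.2.2 Tensor
      - 3.2.3 Operation
    - 3.3 When Computation Starts
    - 3.4 Three Main Types
      - 3.4.1 Constant
      - 3.4.2 Placeholder
      - 3.4.3 Variable
    - 3.5 Basic Math Operations
      - 3.5.1 Scalar Addition
      - 3.5.2 Functions Provided by TensorFlow
      - 3.5.3 Reduction
- PART 2 Numerical Analysis Theory for Deep Learning
  - Chapter 04 Linear Algebra and Differentiation for Optimization Theory
    - 4.1 Linear Algebra
      - 4.1.1 How Linear Algebra Is Approached in Different Curricula
      - 4.1.2 Definitions and Notation
      - 4.1.3 Vector-Vector Operations
      - 4.1.4 Matrix-Vector Operations
      - 4.1.5 Matrix-Matrix Operations
      - 4.1.6 Solving Linear Systems
    - 4.2 Linear Algebra Notation Common in Deep Learning
    - 4.3 Derivatives and the Gradient
  - Chapter 05 Optimization Theory for Deep Learning
    - 5.1 Optimization Problems in Deep Learning
    - 5.2 Where Optimization Problems Begin
    - 5.3 How to Read an Optimization Problem
      - 5.3.1 Linear Regression Using the Sum of Squares
      - 5.3.2 Linear Regression Using the Sum of Absolute Values
    - 5.4 A Preview of Deep Learning Models and Their Optimization Problems
  - Chapter 06 Classical Numerical Optimization Algorithms
    - 6.1 Why Numerical Optimization Algorithms Are Needed
    - 6.2 The Common Pattern of Numerical Optimization Algorithms
    - 6.3 Gradient Descent
      - 6.3.1 Learning Gradient Descent by Example
      - 6.3.2 Limitations of Gradient Descent
    - 6.4 Training a Linear Regression Model with Gradient Descent
      - 6.4.1 Formulating the Linear Regression Problem
      - 6.4.2 Applying Gradient Descent
      - 6.4.3 Limitations
  - Chapter 07 Numerical Optimization Algorithms for Deep Learning
    - 7.1 Stochastic Methods
    - 7.2 Code Implementation Pattern for Stochastic Methods
    - 7.3 Search-Direction-Based Algorithms
      - 7.3.1 Stochastic Gradient Descent
      - 7.3.2 Momentum and Nesterov Methods
    - 7.4 Learning-Rate-Based Algorithms
      - 7.4.1 Why Adaptive Learning Rates Are Needed
      - 7.4.2 Adagrad
      - 7.4.3 RMSProp (Root Mean Square Propagation)
      - 7.4.4 Adam
- PART 3 Training Basic Deep Learning Models with TensorFlow
  - Chapter 08 Linear Regression Models
    - 8.1 Prediction Models and Loss Functions
    - 8.2 Deterministic and Stochastic Methods
      - 8.2.1 Deterministic Methods
      - 8.2.2 Stochastic Methods
    - 8.3 Nonlinear Regression Models
      - 8.3.1 Quadratic Curve Data
      - 8.3.2 Cubic Curve Data
      - 8.3.3 Trigonometric Curve Data
    - 8.4 Estimating Nonlinear Features and Neural Network Models
  - Chapter 09 Linear Classification Models
    - 9.1 Binary Classification Models
      - 9.1.1 Continuous Probability Models
      - 9.1.2 Maximum Likelihood and Cross-Entropy
      - 9.1.3 Training with Mini-Batches
      - 9.1.4 Nonlinear Classification Using Features
    - 9.2 Multiclass Classification Models
      - 9.2.1 Softmax
      - 9.2.2 One-Hot Encoding
      - 9.2.3 Cross-Entropy for Multiclass Models
      - 9.2.4 Training with Mini-Batches
      - 9.2.5 MNIST
  - Chapter 10 Neural Network Regression Models
    - 10.1 Why Neural Network Models Are Needed
    - 10.2 Neural Network Terminology
    - 10.3 Implementing a Neural Network Model
    - 10.4 Different Representations of Neural Network Models
    - 10.5 How Automatic Feature Extraction Works
    - 10.6 Drawbacks of Neural Network Models
  - Chapter 11 Neural Network Classification Models
    - 11.1 Why Neural Network Classifiers Are Needed
    - 11.2 Neural Network Classifiers on Various Data Distributions
      - 11.2.1 Training a Neural Network Classifier
      - 11.2.2 Checkerboard Example
      - 11.2.3 Irregular Data Distribution Example
    - 11.3 Different Representations of Neural Network Classifiers
    - 11.4 The MNIST Classification Problem
- PART 4 Training/Test Data and Underfitting/Overfitting
  - Chapter 12 Introduction to Underfitting and Overfitting
    - 12.1 Deep Learning Models and Functions
    - 12.2 Training Data and the Ground-Truth Function
    - 12.3 The Ground-Truth Function and Test Data
    - 12.4 Two Factors Behind Underfitting and Overfitting
  - Chapter 13 Diagnosing and Fixing Underfitting
    - 13.1 Resetting the Number of Training Iterations
    - 13.2 Resetting the Learning Rate
    - 13.3 Increasing Model Complexity
    - 13.4 An Underfit Neural Network Classifier
    - 13.5 Underfitting Summary
  - Chapter 14 Diagnosing and Fixing Overfitting
    - 14.1 Reducing the Number of Training Iterations
    - 14.2 Adding a Regularization Term
      - 14.2.1 L2 Regularization
      - 14.2.2 L1 Regularization
    - 14.3 Dropout
    - 14.4 Classification Problems
    - 14.5 Introducing Cross-Validation Data
  - Chapter 15 Using TensorBoard
    - 15.1 Drawing Graphs
    - 15.2 Drawing Histograms
    - 15.3 Drawing Images
    - 15.4 Applying TensorBoard to Neural Network Training
  - Chapter 16 Saving and Loading Models
    - 16.1 Saving
    - 16.2 Loading
    - 16.3 Applied Example: Resolving Overfitting
  - Chapter 17 Deep Learning Guidelines
    - 17.1 Steps of a Deep Learning Project
      - 17.1.1 Choosing the Model and Loss Function
      - 17.1.2 Training the Model
      - 17.1.3 Checking for Underfitting
      - 17.1.4 Checking for Overfitting
      - 17.1.5 Checking Final Performance
    - 17.2 Fundamental Limits of Deep Learning Training
      - 17.2.1 The Loss Function Sees Only the Training Data
      - 17.2.2 Data Preprocessing Matters a Great Deal
      - 17.2.3 Loss and Accuracy Are Different
      - 17.2.4 The Distribution of the Test Data Can Never Be Fully Known
- PART 5 Deep Learning Models
  - Chapter 18 CNN Models
    - 18.1 What Is Deep Learning?
    - 18.2 Introducing CNN Models
    - 18.3 Convolution
      - 18.3.1 Kernel/Filter
      - 18.3.2 Strides
      - 18.3.3 Padding
    - 18.4 Max-Pooling
    - 18.5 Dropout
    - 18.6 The ReLU Activation Function
      - 18.6.1 The Vanishing Gradient Problem
      - 18.6.2 Understanding the Problem
      - 18.6.3 Cause of the Problem
      - 18.6.4 Solution
    - 18.7 Automatic Feature Extraction
    - 18.8 The MNIST Digit Classification Problem
      - 18.8.1 A Look at the Data
      - 18.8.2 One-Hot Encoding
      - 18.8.3 Building the CNN Model
      - 18.8.4 Setting Up the Optimization Problem
      - 18.8.5 Setting Hyperparameters
      - 18.8.6 Starting Training
      - 18.8.7 Checking Accuracy
      - 18.8.8 Full Code
  - Chapter 19 GAN (Generative Adversarial Networks) Models
    - 19.1 Introducing the Min-Max Optimization Problem
    - 19.2 Generator
      - 19.2.1 Variable Scope
      - 19.2.2 Leaky ReLU
      - 19.2.3 Tanh Output
    - 19.3 Discriminator
    - 19.4 Building the GAN Network
      - 19.4.1 Hyperparameters
    - 19.5 Loss Functions
    - 19.6 Training
      - 19.6.1 Setting Training Details
      - 19.6.2 Training Loss
      - 19.6.3 Sample Images from the Generator
      - 19.6.4 Generating New Images with the Generator
    - 19.7 Useful Links and Full Code
      - 19.7.1 Useful Links
      - 19.7.2 Full Code
- PART 6 Applications
  - Chapter 20 Images
    - 20.1 Introducing Transfer Learning
    - 20.2 Flower Photo Classification
      - 20.2.1 Prerequisites
      - 20.2.2 Preparing the Environment
      - 20.2.3 The Problem
      - 20.2.4 The VGG16 Model
      - 20.2.5 A Look at the Data
      - 20.2.6 Building the Model
      - 20.2.7 Setting Up the Optimization Problem
      - 20.2.8 Setting Hyperparameters
      - 20.2.9 Training
      - 20.2.10 Accuracy
    - 20.3 Bottleneck Feature Extraction
    - 20.4 Transfer Learning Full Code
  - Chapter 21 Text Analysis with word2vec
    - 21.1 Word Embeddings
    - 21.2 One-Hot Encoding
    - 21.3 The Word2Vec Model
      - 21.3.1 Preparing the Environment
      - 21.3.2 Preprocessing
      - 21.3.3 Subsampling
      - 21.3.4 Creating Batches
      - 21.3.5 Building the Graph
      - 21.3.6 Embedding
      - 21.3.7 Negative Sampling
      - 21.3.8 Validation
      - 21.3.9 Training
    - 21.4 Visualization with t-SNE
    - 21.5 Full Code
- Index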
